Registered Reports

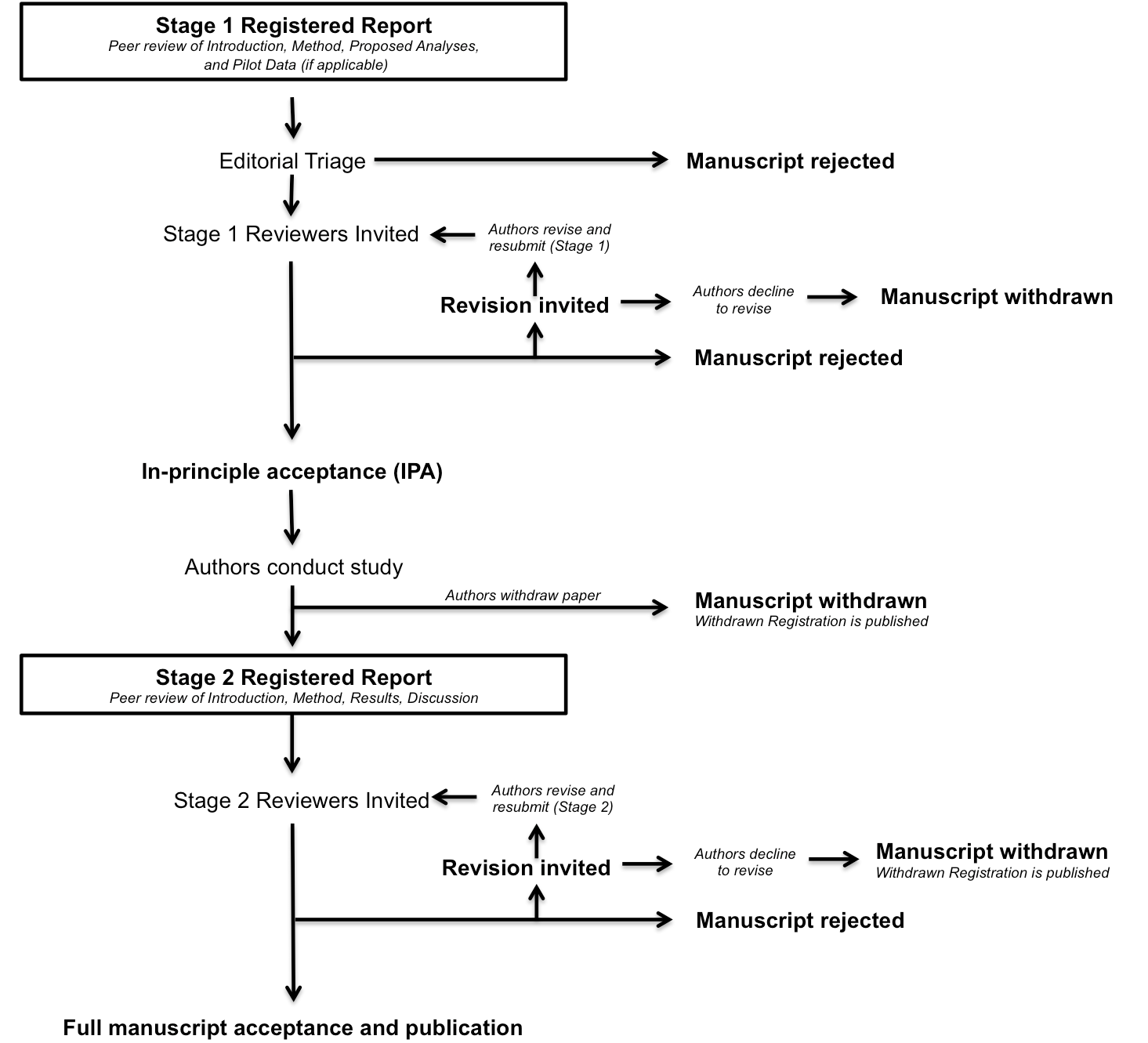

The review process for Registered Reports is divided into two stages. In Stage 1, reviewers assess study proposals before data are collected. In Stage 2, reviewers consider the full study, including results and interpretation.

Figure 1. The review process for Registered Reports (source: Center for Open Science)

Review Process

A Stage 1 submission contains an Introduction and Method (see Figure 1): theoretical background, research rationale, questions and hypotheses, results from pilot studies (if any), methods (design, variables, measures, procedure, sample, power analyses, etc.), planned analyses, and guidelines for the interpretation of possible results. Confirmatory analyses should have statistical power of at least 80%. Authors must fully describe any outcome-neutral criteria that must be met for the stated hypotheses to be tested successfully. Such criteria might include the absence of floor or ceiling effects in data distributions, positive controls, or other quality checks that are orthogonal to the experimental hypotheses. Positive controls are planned design aspects that help validate the approach (e.g., the current manipulations). Positive controls are not possible with all study designs; where they are not included, authors should explain why.
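As a rough illustration of the 80% power requirement, the following Python sketch estimates the per-group sample size for a two-sided comparison of two group means, using a normal approximation. The function names, the standardized effect size of 0.5, and the alpha level of .05 are assumptions chosen for illustration and are not journal requirements. An exact t-based calculation gives slightly larger numbers, and the power analysis in a submission should be based on the test that will actually be run.

```python
from math import ceil, sqrt
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal distribution (mean 0, sd 1)


def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate per-group n for a two-sided, two-group comparison of means.

    d is the standardized mean difference (Cohen's d) to be detected.
    Uses the normal approximation n = 2 * ((z_alpha + z_power) / d) ** 2.
    """
    if d <= 0:
        raise ValueError("d must be a positive effect size")
    z_alpha = _STD_NORMAL.inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_power = _STD_NORMAL.inv_cdf(power)          # quantile for target power
    return ceil(2 * ((z_alpha + z_power) / d) ** 2)


def approx_power(d, n, alpha=0.05):
    """Approximate power with n participants per group (normal approximation).

    The negligible probability of rejecting in the wrong direction is ignored.
    """
    z_alpha = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    return _STD_NORMAL.cdf(d * sqrt(n / 2) - z_alpha)


if __name__ == "__main__":
    n = n_per_group(0.5)  # hypothetical target effect size
    print(f"n per group: {n}, approximate power: {approx_power(0.5, n):.3f}")
```

Under these assumptions the sketch suggests about 63 participants per group for d = 0.5. Smaller target effects raise the required sample size sharply, which is one reason the choice of target effect size needs a clear justification.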

Editors may desk-reject the submission. Editors can also send Stage 1 out for review, and if reviews are favourable, the authors can revise the conceptualization and proposed methods. If the reviewers and editor approve a Stage 1 submission, then the authors receive In-Principle Acceptance, meaning that the journal commits to publishing the final manuscript regardless of the results. The first version of the report should be submitted before the data are gathered.

Then, the authors pre-register the approved protocol, which will be published and archived regardless of Stage 2. This pre-registration must include a time stamp (see further guidelines). The point of the detailed Analytic Plan is to demonstrate how confirmatory each analysis is on the spectrum from completely exploratory to heavily constrained and confirmatory. Authors still have the flexibility to report unregistered and thus exploratory analyses in many cases. The most important point is to clearly distinguish what analytic decisions were made when, and to separate any exploratory analyses from the main, pre-registered analyses. A Registered Report thus offers both rigor and flexibility, coupled with high transparency. See recommended standards and preregistration templates. Stage 1 also must contain sufficient materials, measures, manipulations, and in ideal cases cleaning and analysis code to allow reviewers to evaluate the method. GEP strongly encourages authors to preregister Stage 1 at PsychArchives, the public and freely accessible ZPID repository.

Stage 2. After in-principle acceptance, the authors have nine months to submit Stage 2, which includes the Results and Discussion. Extensions can be granted at the discretion of the handling editor (e.g., in case of longitudinal data, otherwise complex projects, or unforeseen events). The implementation of the project, potential data collection, analyses, and results interpretation should be as close as possible to the peer-reviewed and pre-registered Stage 1. Authors need to detail and explain all deviations from the plan.

The Stage 2 submission is also peer reviewed for adherence to the plan and the appropriateness of the interpretation. Reviewers consider:

- Whether the data are able to test the authors’ proposed hypotheses by satisfying the approved outcome-neutral conditions (such as quality checks and positive controls)

- Whether the Introduction, rationale, and stated hypotheses are the same as the approved Stage 1 submission. The Stage 1 Introduction and Method usually do not have major changes in the Stage 2 paper.

- Whether the authors adhered precisely to the registered experimental procedures

- Whether any unregistered post hoc analyses added by the authors are justified, methodologically sound, and informative

- Whether the authors’ conclusions are justified given the data

Stage 2 review does NOT depend on the significance, perceived novelty, or impact of the results. If the editors or peer reviewers judge the deviations from the approved plan to be too substantial, the in-principle acceptance may be revoked; in such cases, the paper may still be publishable, but not as a Registered Report. Additional rounds of revision are typical for Stage 2 manuscripts.

Tips for Avoiding Desk Rejection at Stage 1

Many Registered Report submissions are desk rejected at Stage 1, prior to in-depth review, for failing to sufficiently meet the Stage 1 editorial criteria. In many such cases, authors are invited to resubmit once specific shortcomings are addressed, although major problems can lead to rejection. Here are the top reasons why Stage 1 submissions are rejected prior to review:

- The protocol contains insufficient methodological detail to enable replication and prevent researcher degrees of freedom. One commonly neglected area is the criteria for excluding data, both at the level of participants and at the level of data within participants. In the interests of clarity, we recommend listing these criteria systematically rather than presenting them in prose.

- Lack of correspondence between the scientific hypotheses and the pre-registered statistical tests. This is a common problem and severe cases are likely to be desk rejected. To maximize clarity of correspondence between predictions and analyses, authors are encouraged to number their hypotheses in the Introduction and then number the proposed analyses in the Methods to make clear which analysis tests which prediction. Ensure also that power analysis, where applicable, is based on the actual test procedures that will be employed to test those hypotheses; i.e., if there is a feasible method to estimate power for the actual analysis, then use that method.

- Power analysis, where applicable, fails to reach 80%. Ideally, the authors will explain how the design is powered to detect plausible-sized effects and how those effects will inform further research.

- Power analysis is over-optimistic (e.g., based on previous literature but not taking into account publication bias) or insufficiently justified (e.g., based on a single point estimate from a pilot experiment or previous study). Proposals should ideally be powered to detect plausible effects of theoretical value, given resource constraints. Pilot data can help inform this estimate but are unlikely to form an acceptable basis, alone, for choosing the target effect size.

- Intention to infer support for the null hypothesis from statistically non-significant results, without proposing use of Bayes factors or frequentist equivalence testing.

- Inappropriate inclusion of exploratory analyses in the main analysis plan. Exploratory “plans” at Stage 1 blur the line between confirmatory and exploratory outcomes at Stage 2. It is often better to not mention exploratory analyses at Stage 1, and then include them at Stage 2. Under some circumstances, exploratory analyses could be discussed at Stage 1 where they are necessary to justify study variables or procedures that are included in the design exclusively for exploratory analysis.

- Failure to clearly distinguish work that has already been done from work that is planned. Where a proposal contains a mixture of pilot work that has already been undertaken and a proposal for work not yet undertaken, authors should use the past tense for pilot work but the future tense for the proposed work. At Stage 2, all descriptions shift to past tense.

- Lack of pre-specified positive controls or other quality checks, or of an appropriate justification for their absence (see Stage 1 criterion 5).
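To illustrate the point in the list above about inferring support for the null hypothesis, here is a minimal Python sketch of frequentist equivalence testing with two one-sided tests (TOST), using a large-sample normal approximation. The function name, the equivalence bounds, and the example numbers are hypothetical. A real analysis would typically use a t-based version from an established statistics package, with the equivalence bounds justified and fixed at Stage 1.

```python
from statistics import NormalDist


def tost_z(diff, se, low, high, alpha=0.05):
    """Two one-sided tests for equivalence (large-sample z approximation).

    diff      observed effect (e.g., a difference in means)
    se        standard error of that effect
    low/high  pre-specified equivalence bounds, with low < high

    Returns (p_value, equivalent). Equivalence is claimed only when BOTH
    one-sided tests reject, so the overall p-value is the larger of the two.
    """
    if se <= 0:
        raise ValueError("se must be positive")
    if low >= high:
        raise ValueError("low must be smaller than high")
    std_normal = NormalDist()
    # Test 1: H0 is "true effect <= low"; reject when diff is well above low.
    p_lower = 1 - std_normal.cdf((diff - low) / se)
    # Test 2: H0 is "true effect >= high"; reject when diff is well below high.
    p_upper = std_normal.cdf((diff - high) / se)
    p_value = max(p_lower, p_upper)
    return p_value, p_value < alpha


if __name__ == "__main__":
    # Hypothetical numbers: observed difference 0.0, standard error 0.1,
    # equivalence bounds of -0.3 and 0.3 in the same units.
    p, equivalent = tost_z(diff=0.0, se=0.1, low=-0.3, high=0.3)
    print(f"TOST p = {p:.4f}, equivalent within bounds: {equivalent}")
```

A non-significant conventional test is not evidence of absence on its own. A procedure such as this one tests directly whether the effect falls inside bounds judged too small to matter.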

Some of these instructions were gratefully adapted from the Registered Report and Replication Guidelines for Language and Speech.